#40 - Deceptive clarity

Have you ever learned a new framework and become excited about how it might solve a complex problem at work? Things may suddenly feel clear when you get your hands on this new shiny tool, but I suggest you beware that such clarity may be deceptive.

To illustrate this point, I want to tell you a story about my experience doing product planning at Holistics. I'm also gonna talk about where I messed up. After all, this newsletter is about putting things into practice, and sometimes that means failures.

Don't worry though, it's not an oh-I-almost-got-fired kind of failure, so I'm not gonna be too dramatic.

Recently, I had an opportunity to do product planning for Holistics – my current employer. Although I’ve done product planning before, this was my first time doing so at Holistics since I joined over a year ago. My task as a squad lead was to identify product opportunities for 2025, given the strategic themes provided by management.

Context

For this story to make sense, let me briefly explain what my company and squad do. Holistics is a business intelligence platform that differentiates itself by incorporating DevOps best practices into the data development process.

My squad handles all things related to Analytics Modeling Querying Language (AMQL), an analytics language centered around metrics. AMQL is made up of two interconnected components: AML and AQL.

- AML is used by analysts to describe data semantic models and business metrics.

- AQL is used to query data at a higher level of abstraction.

AMQL is a core differentiator for Holistics, allowing analysts to apply best practices from software engineering to data engineering.

The messy reality

If this sounds technical, it’s because it truly is. While I’m no stranger to technical products, this one is a different beast.

In this story, I’ll focus on AQL. One obstacle to increasing AQL adoption is its steep learning curve. Users who are just getting started often run into numerous issues – some they can solve themselves, others they need our support for. If you’ve ever tried to learn a new programming language, you can probably relate.

Once they overcome the initial hurdles, however, they often praise AQL for enabling them to describe complex business metrics in just a few lines of code (whereas an equivalent task in SQL could easily require three times as much code). In that sense, there is a small but clear sign of Product-Market Fit for AQL.

But we suspect many people fail to get past that initial complexity and never realize AQL’s potential to do more with less. Why couldn't we be certain? That leads to another particular challenge with AQL: How do you track usage of a programming language?

Here are a few questions we wrestled with:

- Do you send a tracking event on every keystroke? That seems excessive and most likely yields a massive volume of data you’d discard 90% of anyway.

- Do you send a tracking event after the user becomes idle for 3–5 seconds? Many text editors trigger autosave with such a threshold. But what if the user pauses for 5 seconds just to think? It often takes more time to seriously think about anything important.

- Do you send a tracking event every time they switch to a UI mode? Better, because switching modes might indicate wanting to preview code output. But what if they use a dual mode where code and UI are side by side?
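The idle-threshold option can be made concrete with a minimal Python sketch. This is not how Holistics tracks anything. It assumes keystrokes arrive as timestamps in seconds, and `idle_events` and `TrackingEvent` are hypothetical names. The sketch batches each burst of typing into one event once the editor has been idle for `idle_threshold` seconds. That also exposes the weakness raised above: a pause to think splits one formula into two events.

```python
from dataclasses import dataclass


@dataclass
class TrackingEvent:
    """Hypothetical event emitted once the editor goes idle."""
    sent_at: float   # seconds; when the event would be sent
    keystrokes: int  # number of keystrokes batched into this event


def idle_events(keystroke_times: list[float], idle_threshold: float = 5.0) -> list[TrackingEvent]:
    """Batch keystrokes into one event per burst of typing.

    A burst ends when no keystroke follows within `idle_threshold` seconds,
    mimicking an autosave-style heuristic. The event is "sent" when the idle
    threshold elapses after the burst's last keystroke.
    """
    if not keystroke_times:
        return []
    times = sorted(keystroke_times)
    events: list[TrackingEvent] = []
    burst_count = 1  # the first keystroke opens the first burst
    for prev, curr in zip(times, times[1:]):
        if curr - prev >= idle_threshold:
            # The gap was long enough to count as idle, so close the current burst.
            events.append(TrackingEvent(prev + idle_threshold, burst_count))
            burst_count = 0
        burst_count += 1
    # Flush the final burst once the user stops typing.
    events.append(TrackingEvent(times[-1] + idle_threshold, burst_count))
    return events
```

For example, `idle_events([0, 1, 2, 8, 9])` models a user who types three keys, pauses six seconds to think, and types two more. It yields two events (3 keystrokes sent at 7.0 and 2 keystrokes sent at 14.0), even though the user may have been working on a single formula the whole time.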

Hopefully, these questions convey how tricky it is to track AQL usage. If you work on consumer products or even typical technical products, you might take data tracking for granted. That’s not the case with AQL.

High-leverage data points

After confronting the messy reality, I decided to focus on data we already had. In particular, there were two high-leverage sources of data:

AQL formula evolution analysis. Despite the difficulties of tracking AQL explained above, there was still one area where we could reasonably tell where an AQL formula begins and where it ends: when a user defines a new metric.

Imagine that you want to create a report that displays the total number of orders per month as a series of consecutive bars. Translated into an analytics question, it would be: calculate the Total Orders metric and view it on a monthly timestamp dimension. In fact, you can translate almost any business question into an analytics problem by reframing it in terms of metrics and dimensions. I won't go into much detail here; you can learn more about metrics and dimensions here.
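Here is the metric/dimension split for that report, written as a plain-Python sketch. It is not AQL, and it is not how Holistics computes metrics. The input is assumed to be a hypothetical list of order dates, and `total_orders_by_month` is an illustrative name. The metric is a count of orders. The dimension is the order date truncated to the month.

```python
from collections import Counter
from datetime import date


def total_orders_by_month(order_dates: list[date]) -> dict[str, int]:
    """Compute a "Total Orders" metric viewed on a monthly dimension.

    Metric: number of orders. Dimension: order date truncated to "YYYY-MM".
    """
    counts = Counter(d.strftime("%Y-%m") for d in order_dates)
    # Sort by month so each entry maps onto one bar in chronological order.
    return dict(sorted(counts.items()))
```

Calling it with orders on 2024-01-05, 2024-01-20, and 2024-02-03 gives `{"2024-01": 2, "2024-02": 1}`. Swapping the dimension (say, by country) changes only the grouping key, while the metric stays the same. Months with no orders simply don't appear, which a real chart would usually need to fill in.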

When users define a new metric in Holistics, they often write an AQL formula as a new field. They execute this field (which runs the AQL formula and returns a result), repeating the process until the metric produces the correct result. Whenever they execute a field, we send a tracking event containing the AQL formula, a timestamp, and whether the formula executed successfully. Compared to everywhere else, this is the only place where an AQL formula has a clear beginning and end.
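A tracking event with those three pieces of information might look like the minimal sketch below. The field names are assumptions, not Holistics' actual schema. The hypothetical helper `attempts_until_success` counts how many executions a user needed before a formula first ran successfully. That number is one crude signal of how much they struggled.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class FieldExecution:
    """Hypothetical shape of a field-execution tracking event."""
    formula: str           # the AQL formula text as executed
    executed_at: datetime  # when the field was executed
    succeeded: bool        # whether the execution returned a result


def attempts_until_success(executions: list[FieldExecution]) -> Optional[int]:
    """Return the 1-based attempt number of the first successful execution.

    Returns None if nothing succeeded, e.g. the user may have given up.
    """
    ordered = sorted(executions, key=lambda e: e.executed_at)
    for attempt, execution in enumerate(ordered, start=1):
        if execution.succeeded:
            return attempt
    return None
```

A result of 1 means the formula worked on the first try. A large number, or `None`, points at a formula worth reading by hand.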

I extracted this time-series data for the last quarter and analyzed it with help from AI. By grouping AQL formula executions within a one-hour window, treating closeness in time as a stand-in for belonging to the same task (approximating causation via correlation), we get a rough sense of how a user's AQL formulas evolve over time. Admittedly, this is an approximation: users can write more than one metric within that hour, so a single group may contain two completely distinct AQL formulas being developed within that timeframe.
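The one-hour grouping step can be sketched in a few lines of Python. The post doesn't say exactly how the windows were drawn. This sketch assumes each user's window starts at their first execution and closes one hour later, and it assumes each event carries a user ID. `Execution` and `group_into_windows` are hypothetical names. The approximation is deliberate: unrelated formulas written in the same hour end up in the same group.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Execution:
    """Hypothetical tracking event: who ran which formula, when, and whether it worked."""
    user_id: str
    formula: str
    executed_at: datetime
    succeeded: bool


def group_into_windows(
    executions: list[Execution], window: timedelta = timedelta(hours=1)
) -> list[list[Execution]]:
    """Group each user's executions into windows anchored at a group's first execution.

    A new group starts once an execution lands more than `window` after the
    first execution of the current group.
    """
    by_user: dict[str, list[Execution]] = defaultdict(list)
    for e in executions:
        by_user[e.user_id].append(e)

    groups: list[list[Execution]] = []
    for user_executions in by_user.values():
        user_executions.sort(key=lambda e: e.executed_at)
        current = [user_executions[0]]
        for e in user_executions[1:]:
            if e.executed_at - current[0].executed_at > window:
                groups.append(current)  # close the window and start a fresh one
                current = []
            current.append(e)
        groups.append(current)
    return groups
```

Each returned group is a small, time-ordered story of one user iterating on formulas. That is the unit you would then read through by hand.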

I spent days going through more than 300 one-hour groupings and inferring what customers were trying to do. As time-consuming as it was, the exercise helped me build a fingertip feel for how customers were using AQL. The most important thing before you try to do high-level work is to build ground-level abstractions, and you can't get any more grounded than reading individual formulas as they're executed.

Customer interactions. Holistics has a strong culture of customer-centricity. We interact with customers almost daily through discovery calls or support tickets. We don’t have a dedicated support team; every PM and Engineer cycles through support duty on a weekly basis. This ensures everyone stays close to customers.

This has a crucial implication: PMs build strong intuitions about customer problems and quickly know where to look for qualitative data. I've worked at companies that rarely talk to customers, which makes it tough to form accurate insights. In those places, by the time you need data, it's often too late. Here, since we regularly talk to customers, I know exactly who our top customers are and what issues they face, and I can back this up with data.

The problem with quantitative data is that it can't tell you why people do certain things. When interacting with customers directly, you can probe deeper and understand what drives their behavior. For example, sometimes people write a particular expression because they're trying to replicate how they'd approach the problem in SQL, unaware that the right AQL function could get the same job done much more easily.

These qualitative insights formed the second source of information for my product planning.

Simplify with learning gaps

After collecting the quantitative (tracking events) and qualitative (customer conversations) data that was within reach, the next step was to synthesize them. This is easily the most difficult task because there’s no one-size-fits-all approach.

At this point, I need to confess that this is where I messed up—though it wasn't catastrophic.

Typically, making products “usable” means reducing friction, but friction isn’t always the enemy – especially when it comes to learning. Different programming languages have different quirks that often require you to overcome pre-existing habits.

That realization nudged me to think in terms of learnability instead of usability. To figure out what that actually meant, I decided to look beyond product management into the realm of behavioral science. One book that shaped my perspective was Talk to the Elephant by Julie Dirksen, which discusses how to design learning experiences that drive behavioral change. That, in turn, led me to another excellent resource by Dirksen: Design for How People Learn.

In that book, she identifies several distinct types of learning gaps:

- Knowledge Gaps. In many learning situations, we assume the problem is a lack of information. But even if a learner memorizes all the relevant facts about a product or process, they won’t automatically become proficient. True learning occurs when they use that information to accomplish something.

- Skill Gaps. Having a skill is different from simply knowing something. If we suspect there’s a skill gap rather than just a knowledge gap, we should ask: “Is it reasonable to think someone can be proficient without practice?” If the answer is no, then we’re dealing with a skill gap that requires hands-on rehearsal.

- Motivation Gaps. Even if someone knows what to do, they won’t necessarily choose to do it. Maybe they don’t really buy into the outcome, or the destination doesn’t make sense, or they feel anxious about change. Whatever the reason, lack of motivation can halt learning in its tracks.

- Environment Gaps. Sometimes the environment itself isn’t set up for success. If you want someone to change their behavior, do they have the necessary resources, rewards, or infrastructure? Even the best training can fail if the learner’s surroundings don’t support their new actions.

- Communication Gaps. Often, people struggle to perform because of unclear or ineffective directions. The person doing the teaching (or designing the product) knows where they want the learner to go, but can’t communicate that path in a way that the learner can follow.

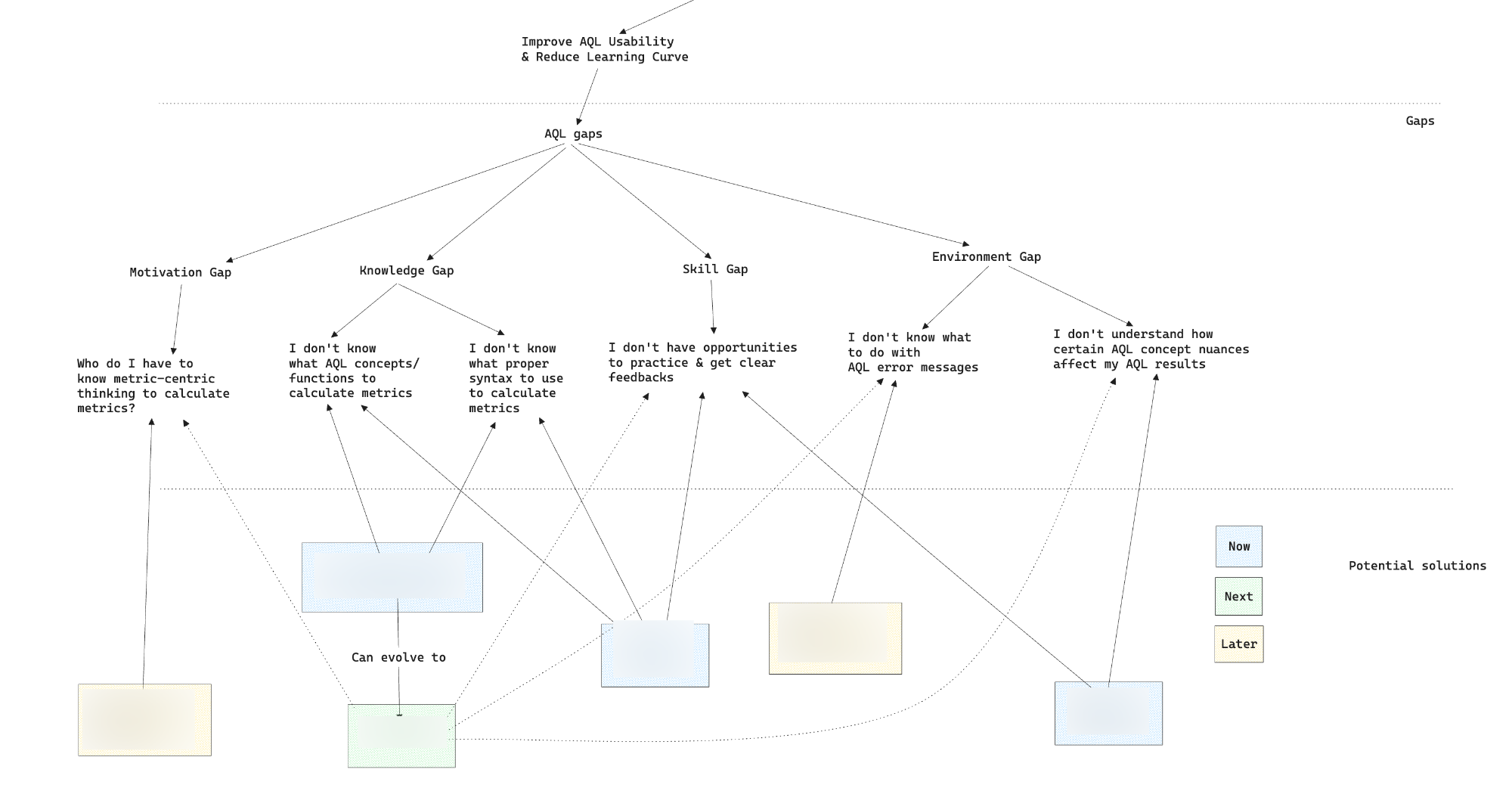

I realized that we could evaluate AQL learnability issues through these learning gaps, and suddenly everything felt clear.

But this clarity isn't really because you've seen through a situation; it's because you're neglecting what makes the situation tricky. That's why it's deceptive. Learning to recognize deceptive clarity is an ongoing process that takes continual investment and maintenance.

I wasn't doing it intentionally, but I definitely made some leaps that I couldn't properly justify. Here are a few of them:

- Motivation Gaps.

  - Looking at the data, some users just straight up wrote SQL code where they should have written AQL.

  - They were surely influenced by SQL, but I made the leap that they chose to write SQL because they didn't see why they had to learn AQL. In other words, I assumed they lacked strong motivation to learn AQL.

  - Perhaps they didn't see why they should learn a new paradigm at all. However, that was speculation at best. I considered the possibility mainly because the term Motivation Gap was there in the framework, and the data itself didn't obviously back up this reasoning.

  - I'm not saying it's impossible, but it's unsupported by data.

- Knowledge Gaps vs Skill Gaps.

  - Can you clearly distinguish between knowledge gaps and skill gaps?

  - Theoretically, someone who learns all the fundamental concepts in AQL can't necessarily map a complex, real-world analytics problem onto AQL functions and syntax. That would be a skill gap.

  - But that's a thin line, because you can argue it only means they haven't fully bridged the knowledge gap. This came up during the product review as well, and debating it wasn't particularly helpful.
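The first observation in that list, users writing SQL where AQL was expected, could be surfaced with a crude keyword heuristic. The sketch below is a hypothetical way to flag such formulas for manual review. It is not something the analysis above is known to have used, and the keyword list is an illustrative assumption. A flag like this tells you *that* SQL was written, not *why*. That gap is exactly what the motivation leap papered over.

```python
import re

# Illustrative assumption: SQL clause keywords that look out of place in an
# AQL formula. This is not an official Holistics rule.
SQL_MARKERS = [
    r"\bselect\b",
    r"\bfrom\b",
    r"\bgroup\s+by\b",
    r"\bjoin\b",
    r"\bcase\s+when\b",
]


def looks_like_sql(formula: str, min_hits: int = 2) -> bool:
    """Flag a formula as SQL-like when at least `min_hits` distinct markers appear.

    Requiring several markers reduces false positives from a single word
    such as a field that happens to be named "from".
    """
    text = formula.lower()
    hits = sum(1 for pattern in SQL_MARKERS if re.search(pattern, text))
    return hits >= min_hits
```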

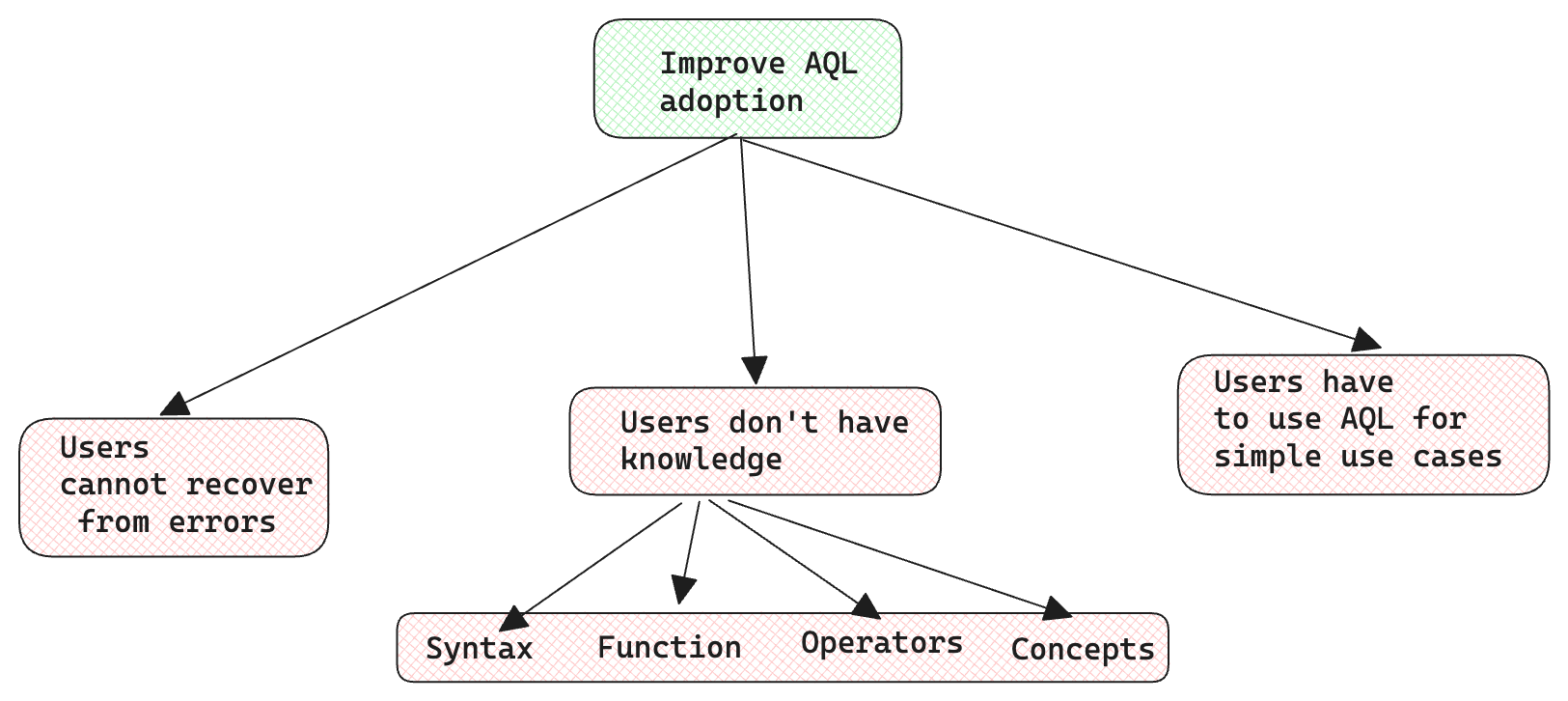

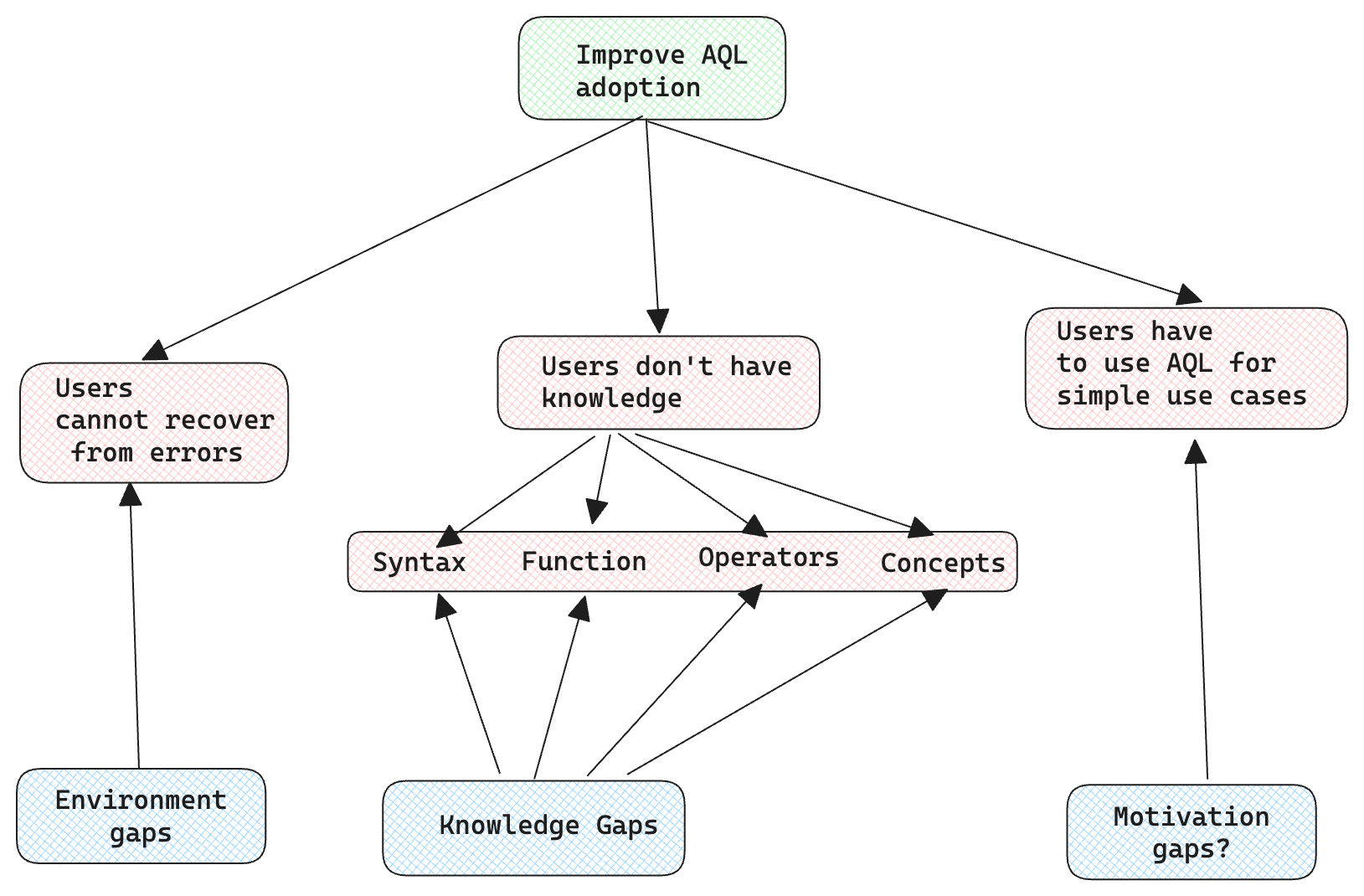

In other words, some parts definitely fit, but others didn't. After discussing it with the team, we aligned on the following problems to focus on.

I removed the explicit references to knowledge gaps, motivation gaps, and environment gaps from the problem statements. They still sort of apply, just not in a top-down fashion. Here's how they map:

- Environment gaps.

  - Helping users recover from errors is clearly a property of the software environment. Data showed that customers struggled to understand error messages and didn't know what to do next.

- Knowledge gaps.

  - Customers used incorrect functions, syntax, operators, or concepts when writing AQL. This is backed up by the AQL formula evolution analysis above. These are straightforward knowledge gaps.

- Motivation gaps.

  - Customers use AQL for simple use cases, but we designed it to handle more complex calculations. Ideally, customers would have other ways to accomplish these simple use cases. "Simple things should be simple" is a key tenet of Holistics' product development principles.

  - Is this a motivation gap? I'm not sure. Perhaps motivation rises to match the complexity of the task at hand, so users lack the motivation to learn AQL just for basic stuff. That's why I've put a question mark here.

Regardless of some of the leaps I made, the data analysis I brought to the table was really useful. I tapped into the right high-leverage data points, and they helped guide the discussion with management toward eventual alignment. Without data, you can only rely on intuition and speculation, which might be right but isn't enough to get people aligned. PMs have to get people on the same page so that the team can operate at maximum efficiency, so alignment isn't simply a matter of consensus.

I fumbled a bit at simplifying because I was trying to overfit a messy reality into a neat framework. It would have been awesome if I had clearly seen where things didn't fit, but that's easier said than done. I did the best I could, and it turned out great, so I'm not brooding too much.

Conclusion

“Deceptive Clarity” is a really nice title. But it's also a reminder that tidy frameworks can make us feel more certain than we actually are. They simplify reality but risk glossing over the nuances that matter most. In my AQL product planning at Holistics, I leaned too heavily on a framework at first, only to realize the real insights emerged when I dug into messy data and talked to customers directly.

The takeaway? Frameworks are helpful, but they don’t replace hands-on discovery and open-minded exploration. Let reality be your teacher. Sometimes, fumbling is part of finding the right path.

If you enjoyed these reflections, feel free to subscribe to my newsletter for more stories from the product trenches—no spam, unsubscribe anytime.
